The art and science of predicting a college basketball season

Sports Illustrated’s College Basketball Projection System is a collaboration between the economist Dan Hanner and SI’s Luke Winn (with assistance in 2016 from SI’s Jeremy Fuchs) that generates our player forecasts and 1–351 preseason rankings by simulating the season 10,000 times. As our preview coverage kicks off, we wanted to offer a closer look at three aspects of the projection model:

• Which types of players, according to its historical data, are most likely to be efficient starters

• How the model ranks teams 1–351

• Why we think the model has produced the nation’s most accurate preseason projections for each of its two years of existence

1. The players most likely to be efficient offensive starters

On offense, our model projects each player’s efficiency and shot volume based on his past performance, recruiting rankings, AAU advanced stats (a new addition in 2016–17), development curves for similar Division I players over the past 14 seasons, the quality of his teammates and his coach’s ability to develop and maximize talent. It then places each player in the context of his team’s rotation and projects his percentage of minutes played.

The historical data we’ve gathered from the past 14 seasons provides a unique window into which players become efficient starters. For the purposes of this column, we’ll define “efficient starter” as someone who plays at least 50% of available minutes and has an offensive rating, adjusted for shot volume and the strength of the defenses he faced, better than 110, or 1.1 points per possession. Here is how often each type of player has reached that level and thus become a valuable, better-than-D-I-average offensive starter:

| Type of Player | Percentage of Time Became Efficient Starter | 2016–17 Example |
|---|---|---|
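The efficient-starter definition above boils down to two thresholds. A minimal Python sketch of that filter, assuming each player-season already carries an adjusted offensive rating (the article does not spell out the adjustment itself) and using a hypothetical `PlayerSeason` record, might look like this:

```python
from dataclasses import dataclass

# Thresholds from the article's definition of an "efficient starter".
MIN_MINUTES_SHARE = 0.50  # plays at least 50% of available minutes
MIN_ADJ_ORTG = 110.0      # adjusted offensive rating better than 110 (1.1 points per possession)


@dataclass
class PlayerSeason:
    """Hypothetical record for one player-season."""
    name: str
    minutes_share: float  # fraction of available minutes played, 0.0 to 1.0
    adj_ortg: float       # offensive rating, assumed already adjusted for shot volume and schedule


def is_efficient_starter(p: PlayerSeason) -> bool:
    """True when the season clears both the minutes and the efficiency threshold."""
    return p.minutes_share >= MIN_MINUTES_SHARE and p.adj_ortg > MIN_ADJ_ORTG


def success_rate(group: list[PlayerSeason]) -> float:
    """Share of a group (e.g. top-10 recruits as freshmen) that became efficient starters."""
    if not group:
        return 0.0
    return sum(is_efficient_starter(p) for p in group) / len(group)


# Four made-up seasons: only "A" and "D" meet both conditions.
sample = [
    PlayerSeason("A", 0.60, 112.0),
    PlayerSeason("B", 0.70, 105.0),  # enough minutes, not efficient enough
    PlayerSeason("C", 0.30, 120.0),  # efficient, but not a starter
    PlayerSeason("D", 0.50, 110.5),
]
print(success_rate(sample))  # 0.5
```

Applying `success_rate` to each player type in turn would produce the kind of percentages the table reports.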

You probably don’t need a projection system to tell you that efficient veteran stars—the prime examples this season being Duke’s Grayson Allen and Valparaiso’s Alec Peters—are the surest and rarest commodities in college hoops. But it’s also worth noting that they only repeat as efficient starters 86% of the time, with injuries, eligibility issues, roster and coaching changes, and the general unpredictability of college players accounting for the 14% failure rate.

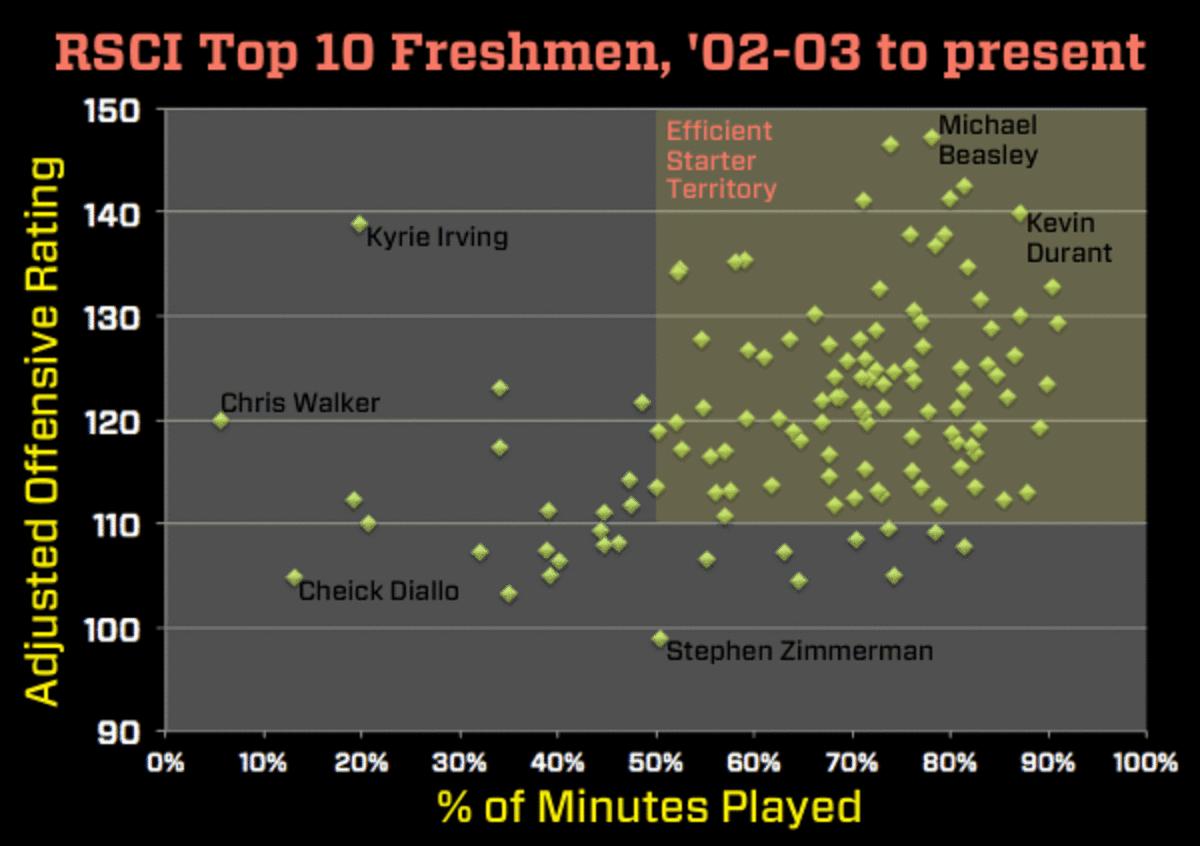

Coming off a season in which Kansas’s Cheick Diallo barely got off the bench, Kentucky’s Skal Labissiere struggled and UNLV’s Stephen Zimmerman posted the worst adjusted offensive rating by a top-10 freshman in our 14-year database, there might be an inflated sense among fans and pundits that top-10 recruits are risky assets. The reality is that they’re the next-best thing to have after an elite veteran star. Players ranked in the Recruiting Services Consensus Index’s top 10 turn into efficient starters 76% of the time. Here are the past 14 years of top-10 recruits, plotted according to adjusted offensive rating and minutes played:

(This should be obvious, but elite freshmen can be limited by more than poor play. In the chart above, Chris Walker was ineligible for his first semester at Florida in 2013–14, and Kyrie Irving’s value was sapped by injuries for most of his one season at Duke.)

What many fans don’t realize is how quickly the quality of freshman performance drops off further down the rankings. Recruits in the 51–100 range tend to arrive with plenty of hype, but only 13% of them have played at an efficient-starter level as freshmen. The majority of those prospects don’t become efficient starters until their sophomore seasons. There’s always hope, though, that an overlooked or late-blooming prospect will render his recruiting ranking void. Approximately 200 D-I teams compete with rosters made up almost entirely of two-star recruits, and only 2% of those recruits have turned out to be efficient starters as freshmen.

Coaches have long viewed junior-college transfers as quick fixes for roster holes, and the better juco recruits do tend to have more of an immediate, quality impact (an 18% success rate in the table above) than do recruits outside the top 50. But jucos rarely debut as elite offensive players the way power forward Chris Boucher did for Oregon last season. For the vast majority, impressive juco stats just haven’t translated to the higher level of competition.

When it comes to lesser veterans, it’s remarkable how infrequently a player who was highly inefficient (with a sub-100 offensive rating) in starter minutes turned into a valuable starter the following season: that happened only 11% of the time. The forecast is even worse for benchwarmers hoping to make the leap. Players who received less than 20% of available minutes in the previous season became efficient starters only 3% of the time.

(One final note: The table likely overstates the probability of success for juco players and two-star recruits, because these players are more likely to not play a single minute—and when that happens, they don’t show up in the historical data.)
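The selection effect in that note can be shown with simple arithmetic. The sketch below uses made-up counts (not the projection system's data): when players who never log a minute drop out of the sample, the denominator shrinks and the observed success rate rises.

```python
def observed_and_true_rate(successes: int, played: int, never_played: int) -> tuple[float, float]:
    """Return (rate among players who appear in the data, rate over the full cohort).

    Players who never log a minute are absent from box-score data, so the
    observed rate divides only by `played`.
    """
    observed = successes / played
    true_rate = successes / (played + never_played)
    return observed, true_rate


# Hypothetical cohort of 200 recruits: 160 played, 40 never did, 4 became efficient starters.
observed, true_rate = observed_and_true_rate(successes=4, played=160, never_played=40)
print(f"observed {observed:.1%}, full cohort {true_rate:.1%}")  # observed 2.5%, full cohort 2.0%
```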

2. How we rank the teams 1–351

Due to the large amount of variance in college players’ performances, we project the range of possible outcomes for each player on a team, then simulate the season 10,000 times, taking a different draw for each player, each time. We then rank teams based on their median outcome from all the simulations.
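A stripped-down sketch of that simulate-then-take-the-median loop follows. Purely for illustration, it assumes each player's contribution to team strength can be summarized by a normal distribution with a hypothetical mean and standard deviation; the real model draws full tempo-free stat lines, which this sketch does not attempt.

```python
import random
import statistics

# Hypothetical input: each roster is a list of (mean, sd) player contributions,
# in points per 100 possessions.
Roster = list[tuple[float, float]]


def simulate_team(players: Roster, n_sims: int, rng: random.Random) -> list[float]:
    """One simulated team rating per season, with a fresh draw for every player each time."""
    return [sum(rng.gauss(mean, sd) for mean, sd in players) for _ in range(n_sims)]


def rank_by_median(teams: dict[str, Roster], n_sims: int = 10_000, seed: int = 0) -> list[str]:
    """Rank teams by the median of their simulated ratings, best first."""
    rng = random.Random(seed)  # seeded so repeated runs give the same ranking
    medians = {name: statistics.median(simulate_team(p, n_sims, rng)) for name, p in teams.items()}
    return sorted(medians, key=medians.get, reverse=True)


teams = {
    "Team A": [(5.0, 2.0), (3.0, 1.5)],   # median near 8
    "Team B": [(2.0, 3.0), (1.0, 1.0)],   # median near 3, with a wide range
    "Team C": [(-1.0, 2.0), (0.5, 1.0)],  # median near -0.5
}
print(rank_by_median(teams))  # ['Team A', 'Team B', 'Team C']
```

Ranking on the median rather than the mean keeps a handful of extreme simulated seasons from moving a team much.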

Our process begins by projecting a player’s tempo-free stats (usage rate, offensive rating, and assist, rebound, block and steal rates) based on recruiting rankings, advanced stats from Nike’s EYBL AAU circuit (when applicable), the player’s past college stats and development curves for similar D-I players over the past 14 years. Then we make adjustments based on a player’s teammates. Duke’s Frank Jackson, for example, would be an aggressive, high-usage scorer if projected in a vacuum, but our projections limit his shot volume because he plays alongside Allen and potential freshman stars Jayson Tatum and Harry Giles III.
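One way to express "adjust for teammates" is the accounting identity behind usage rate: weighted by time on the floor, a team's players use roughly 100% of its possessions. The sketch below assumes, hypothetically, that vacuum projections are simply scaled down in proportion until they fit; the article does not say how the system actually redistributes shots.

```python
def normalize_usage(players: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Scale vacuum usage projections so the roster uses 100% of team possessions.

    players maps name -> (minutes_pct, vacuum_usage):
      minutes_pct: share of game minutes on the floor (0 to 1; a full rotation sums to about 5.0)
      vacuum_usage: share of possessions used while on the floor, projected in isolation
    Proportional scaling is a simplifying assumption.
    """
    used = sum(minutes * usage for minutes, usage in players.values())
    scale = 1.0 / used
    return {name: usage * scale for name, (_, usage) in players.items()}


# Hypothetical five-man rotation, each playing every minute, projected in isolation.
roster = {"p1": (1.0, 0.28), "p2": (1.0, 0.26), "p3": (1.0, 0.24), "p4": (1.0, 0.22), "p5": (1.0, 0.20)}
adjusted = normalize_usage(roster)
print(round(adjusted["p1"], 3))  # 0.233 (the 120% total is scaled back to 100%)
```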

The next step is to adjust those advanced stats based on their coach—how well players tend to develop under that coach, and how optimally their coach deploys his talent. (These coaching factors matter a great deal; for more on them, see the study we published in March looking at the past 10 years of offensive coaching performance.) Once we arrive at our final advanced-stats profile for each player, we project playing time based on a combination of the player’s past performance, the quality of his teammates, his coach’s substitution tendencies, and human intel we’ve gathered on likely rotations—both from our own scouting and from team sources. Then we add up the value of all the players on the team to get an overall offensive efficiency estimate for the team.
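For the final "add up the value" step, here is a sketch assuming the team figure is a possession-weighted average of player offensive ratings, with each player weighted by minutes share times usage. That is a common box-score approach; the SI model's exact aggregation is not described.

```python
def team_offensive_efficiency(players: list[tuple[float, float, float]]) -> float:
    """Points per 100 possessions for the team.

    Each entry is (minutes_pct, usage, ortg). A player's weight is the share of
    team possessions he uses: minutes_pct * usage.
    """
    weights = [(minutes * usage, ortg) for minutes, usage, ortg in players]
    total = sum(w for w, _ in weights)
    return sum(w * ortg for w, ortg in weights) / total


# A high-usage 120 ORtg starter and a low-usage 90 ORtg reserve (hypothetical numbers).
print(round(team_offensive_efficiency([(1.0, 0.30, 120.0), (0.5, 0.20, 90.0)]), 1))  # 112.5
```

Dividing by the total weight keeps the result a proper average even if the usage rates were not normalized first.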

Our defensive efficiency projections are based on a hybrid system that combines a coach’s defensive history, his team’s recent defensive performance and advanced-stat projections at the player level. For teams that return most of their rotation, the previous year’s defensive performance carries a lot of weight. For teams that return a moderate number of players, we start from last year’s defensive performance and adjust it by comparing the rebounding, steal and block rates of the outgoing players with the projections for the players who will see more playing time. And for teams with a mostly new rotation, we put more weight on the coach’s defensive performance throughout his career and less on the previous season.
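Those three tiers describe a sliding weight between last season's defense and the coach's career record. The sketch below replaces the tiers with a single linear weight equal to the share of returning minutes; that weight and the additive player-level term are assumptions for illustration only.

```python
def project_defense(
    last_season_adj_d: float,
    coach_career_adj_d: float,
    returning_minutes_share: float,
    player_level_delta: float = 0.0,
) -> float:
    """Projected adjusted defensive efficiency (points allowed per 100 possessions; lower is better).

    returning_minutes_share: fraction of last season's minutes that return (clamped to 0-1).
    player_level_delta: hypothetical correction from comparing outgoing and incoming
        players' rebound, steal and block rates (negative means better defense).
    """
    w = min(max(returning_minutes_share, 0.0), 1.0)
    blended = w * last_season_adj_d + (1.0 - w) * coach_career_adj_d
    return blended + player_level_delta


# Veteran team: last season dominates. Mostly new rotation: the coach's career dominates.
print(round(project_defense(95.0, 100.0, 0.8), 1))  # 96.0
print(round(project_defense(95.0, 100.0, 0.2), 1))  # 99.0
```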

We also account for the fact that teams have little control over their opponents’ three-point and free-throw percentages. Teams that were hurt by opponents making a high percentage of threes and free throws last season are projected to bounce back on defense, since we view most of that as bad luck.
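Here is a sketch of that luck adjustment, assuming last season's opponent percentages are pulled most of the way back to the league average. The 80% "luck share", the league averages and the attempt rates below are all hypothetical values, not figures from the SI model.

```python
def regress_to_mean(observed_pct: float, league_avg_pct: float, luck_share: float = 0.8) -> float:
    """Treat `luck_share` of last season's deviation from the league average as luck and remove it."""
    return observed_pct - luck_share * (observed_pct - league_avg_pct)


def points_recovered_per_100(attempts_per_100: float, observed_pct: float,
                             projected_pct: float, points_per_make: int) -> float:
    """Points per 100 possessions a defense should stop allowing once opponents' shooting regresses."""
    return points_per_make * attempts_per_100 * (observed_pct - projected_pct)


# Opponents hit 38% of threes (20 attempts per 100 possessions) and 74% of free throws (25 attempts).
proj_3p = regress_to_mean(0.38, league_avg_pct=0.34)  # about 0.348
proj_ft = regress_to_mean(0.74, league_avg_pct=0.70)  # about 0.708
bounce_back = (points_recovered_per_100(20, 0.38, proj_3p, 3)
               + points_recovered_per_100(25, 0.74, proj_ft, 1))
print(round(bounce_back, 2))  # 2.72
```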

Height also matters for the defensive projection, particularly at center. For example, we project that UCF’s 7' 6" Tacko Fall will play more this season, and by funneling more drivers (and shots) in Fall’s direction, we expect UCF to make a meaningful defensive improvement. Similarly, the graduation of UC Irvine’s 7' 6" Mamadou N’Diaye leads us to project that its defense will fall off significantly.

The final step is to combine the offensive and defensive efficiency projections for each team to create a projected margin-of-victory (MOV) figure—and teams are then ranked 1–351 based on that number.
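In tempo-free terms, the margin can be sketched as offensive efficiency minus defensive efficiency (points per 100 possessions), optionally converted to a per-game figure with a tempo estimate. The per-game conversion and the function names here are assumptions; the article says only that the two projections are combined.

```python
from typing import Optional


def projected_margin(adj_o: float, adj_d: float, possessions_per_game: Optional[float] = None) -> float:
    """Efficiency margin per 100 possessions, or per game if a tempo is supplied."""
    margin = adj_o - adj_d
    if possessions_per_game is not None:
        margin *= possessions_per_game / 100.0
    return margin


def rank_teams(projections: dict[str, tuple[float, float]]) -> list[str]:
    """Order teams from best to worst projected margin; projections maps name -> (adj_o, adj_d)."""
    return sorted(projections, key=lambda team: projected_margin(*projections[team]), reverse=True)


projections = {"A": (110.0, 100.0), "B": (120.0, 95.0), "C": (100.0, 105.0)}  # hypothetical
print(rank_teams(projections))                         # ['B', 'A', 'C']
print(round(projected_margin(115.0, 95.0, 70.0), 1))  # 14.0 points per game
```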

3. The most accurate projections for two seasons running

In the interest of transparency, here are a few facts about the projection system’s performance:

• The Associated Press preseason top 25 is always the most popular human projection, and although our 2015–16 forecast mostly agreed with the AP poll at the top, we did have some key differences. The AP voters ranked Vanderbilt, Wisconsin, LSU, Baylor and Butler in their top 25 while SI’s projections did not, and none of those teams finished in the top 25 according to margin of victory. SI also projected three top-25 teams that poll voters left out: Miami, Xavier and Louisville. Our model was far too bullish, however, on Georgetown (whose best players regressed) and San Diego State.

• After our system’s debut season of 2014–15, statistician Ken Pomeroy’s analysis concluded that we had the most accurate preseason projections for that year. Following the 2015–16 season, we reviewed all available 1–351 predictions, both from human experts (CBS) and computers (ESPN, kenpom.com, TeamRankings.com), and believe that our model was once again the most accurate.

Our method of judging performance was to compare each model’s projected preseason rankings with the final rankings on kenpom.com, which we believe is the best assessor of overall, season-long team strength. The first set of numbers in the comparison table below is absolute error, or the sum of the absolute differences between each team’s preseason ranking and its final kenpom.com ranking. (SI had the smallest absolute error.) The second set of numbers is the average number of spots per team by which each projection model improved upon a hypothetical model that simply used the previous season’s final rankings as its preseason predictions. By this measure, SI’s model had an average improvement of 13.1 spots per team, compared to 10 for the other computer models (kenpom, TeamRankings, ESPN) and 7.6 for the human ranking (CBS).

| Ranking | SI | KenPom | TeamRankings | ESPN | CBS |
|---|---|---|---|---|---|
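The two metrics described above can be sketched as follows, assuming rankings are stored as team-to-rank dictionaries covering the same set of teams. Computing "improvement" as the baseline's mean absolute error minus the model's is our reading of the description, not a published formula.

```python
def absolute_error(projected: dict[str, int], final: dict[str, int]) -> int:
    """Sum over teams of |preseason rank - final rank|."""
    return sum(abs(projected[team] - rank) for team, rank in final.items())


def avg_improvement(projected: dict[str, int], final: dict[str, int],
                    last_season_final: dict[str, int]) -> float:
    """Average spots per team by which a model beats a 'repeat last season's final ranking' baseline."""
    baseline_error = absolute_error(last_season_final, final)
    return (baseline_error - absolute_error(projected, final)) / len(final)


# Toy four-team league (hypothetical ranks).
final = {"A": 1, "B": 2, "C": 3, "D": 4}
model = {"A": 2, "B": 1, "C": 3, "D": 4}        # error 2
last_season = {"A": 4, "B": 3, "C": 2, "D": 1}  # error 8
print(absolute_error(model, final), avg_improvement(model, final, last_season))  # 2 1.5
```

Teams without a previous-season rank (for example, new Division I members) would need some fallback baseline rank, which this sketch does not handle.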

Here are some notable teams for which SI.com’s projection fared better than the other computer approaches:

| Team | 2015–16 Final MOV | SI | KenPom | TeamRankings | ESPN |
|---|---|---|---|---|---|

Some of these were cases where SI’s player-by-player projection model held an advantage over formulas that focus largely on the percentage of minutes a team returns from the previous season. Wisconsin’s uncharacteristic reliance on multiple unranked freshmen led our model to be more skeptical about the Badgers. The model identified the number of sub-replacement-level players in Rutgers’ rotation and correctly forecasted the Scarlet Knights as the worst team in any major conference. Our coaching-based adjustments also helped us get ahead of the curve on teams such as Xavier, Virginia Tech and Houston. (Kelvin Sampson, for example, had top-30 offenses in six of his seven seasons at Indiana and Oklahoma between 2002 and 2008, so the model forecasted him to improve Houston’s offense . . . which went on to finish 21st in efficiency last season.)

This isn’t to say that SI’s forecasts were perfect, or even close to perfect. We had some projections that turned out to be far worse than those of the other computer formulas:

| Team | 2015–16 Final MOV | SI | KenPom | TeamRankings | ESPN |
|---|---|---|---|---|---|

In the case of Auburn, our coaching-based adjustments forecasted a Bruce Pearl breakthrough based on his extensive success at Tennessee, but that hasn’t happened yet with the Tigers. UNLV had multiple highly ranked recruits whose production and development lagged well behind national norms, and neither Georgetown nor Maryland saw its essential players improve upon previous seasons, as their development curves had suggested they would. St. Joseph’s, meanwhile, had some unprecedented individual breakthroughs in efficiency (namely Isaiah Miles morphing from a sub-replacement-level starter as a junior to a star as a senior) that caused its offense to improve from 257th to 26th. That was one of many reminders that when you’re dealing with subjects as unpredictable as 18- to 24-year-old basketball players, there are things that even the most accurate projection system cannot see coming.