How Major League Baseball Adopted the Save—and Changed the Game Forever

This story appears in the June 3, 2019, issue of Sports Illustrated.

By and large, baseball’s mainstream counting stats are self-explanatory, tangible events that look exactly like they sound. Anyone can see a home run, a walk, an RBI. There might be some fuzziness around the edges (you can quibble with the logic behind the pitching win), but the criteria are still straightforward. These numbers are clear, or as clear as they can be. They’re the building blocks of both the game and the box score.

And then there’s the save.

More an interpretative definition of an act than an act itself, and only possible under specific criteria, the save has not simply measured relievers’ performances. It’s shaped them. After Major League Baseball officially recognized the statistic in 1969, the save began to influence teams’ approach to relief pitching—“one case of baseball statistics actually changing strategy,” as Alan Schwarz wrote in his history of the sport’s stats, The Numbers Game. The “save situation” entered the lexicon, and it dictated the terms of use for closers. It created an entirely new standard by which to judge relievers, and, in turn, a new motivation for teams to pay them. Fifty years after its birth, the save has made millionaires and revolutionized relief.

Which makes it all the more remarkable that the save was born only after years of trial and error, by way of a battle between baseball writers and teams, out of a sea of competing definitions. Over the last decade, arguments against the stat have become more popular than ever, as teams have begun to acknowledge that the best time to use the best reliever isn’t always the one to result in a save—but debates over the save’s efficacy have been around from the very beginning, and there’s a clear line between the ones from half a century ago and the ones of today. The save introduced an arbitrariness previously unseen in mainstream baseball statistics, and it, quite literally, changed the game.

To the extent that most baseball fans know anything about the birth of the save, they know this: Jerome Holtzman, a beat writer in Chicago, invented the stat in 1959, and baseball adopted it as an official statistic in 1969. This version isn’t wrong—but it is incomplete, just one piece of a saga that included years of dramatic rule changes and briefly threw the scorekeeping system into chaos. “What is a save?” was not first asked by Holtzman in 1959, and it certainly was not last answered by MLB in 1969.

In a general sense, “save” has been used to describe a quality effort by a reliever for just about as long as baseball has had relievers—which is to say, just about as long as modern baseball has existed. The first recorded mention of the term was in 1907; it appears in Ty Cobb’s memoir from 1915. But a “save” was only a loose idea rather than a statistic, and a “reliever” was typically just a starter entering a game between his regularly scheduled appearances. This changed over the decades, and by the late 1930s it was not unusual to see full-time dedicated relievers. Yet as they grew more popular, it became clear that there was no adequate way to measure the value of their work.

Enter baseball’s first full-time statistician, Allan Roth. Brooklyn Dodgers president Branch Rickey, sensing an opportunity to gain an edge with a dedicated mathematician in the front office, had hired him in the ’40s. In 1951, Roth set about tracking the team’s relievers, and he came up with the first formal definition of the save: Any non-winning relief pitcher who finished a winning game would be credited with one, no matter how large his lead. If the team won, and he finished the game, he’d earn a save.

The system was imperfect—had a reliever “saved” anything if he entered with a double-digit lead?—but the basic concept began to spread to other teams, to reporters, and to pitchers themselves. From the beginning, the metric was linked to a reliever’s earning potential. “Saves are my bread and butter,” Cubs reliever Don Elston told The Sporting News in 1959. “What else can a relief pitcher talk about when he sits down to discuss salary with the front office?”

Roth began to share his definition with the media in the late ’50s, and before long, the save made its first major evolution. In 1960, the stat had a new formula, a new architect, and a new principle to prove.

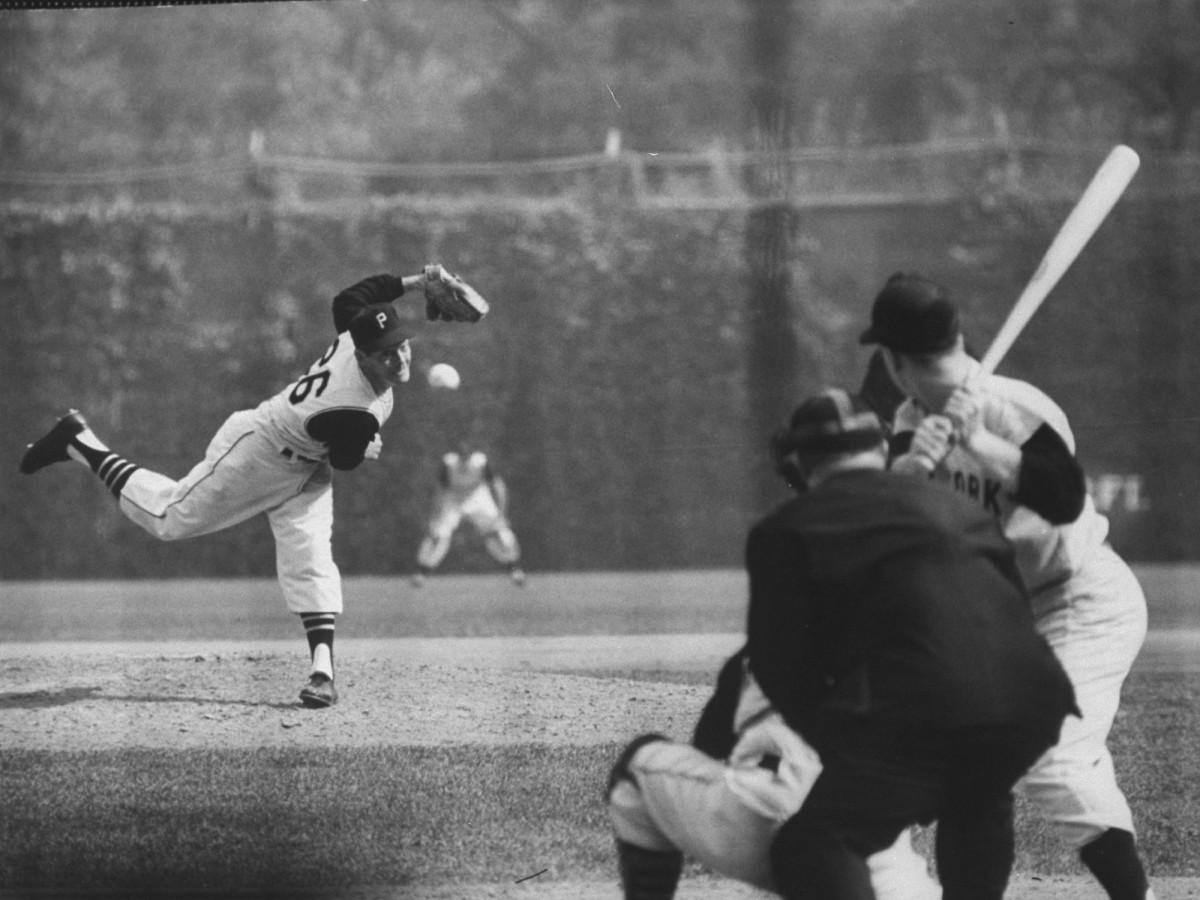

Holtzman, a Cubs beat writer for the Chicago Sun-Times, had spent the 1959 season watching Elston and teammate Bill Henry, and he suspected that they were among the best relievers in baseball. However, a different pitcher was getting the attention: Pirates reliever Elroy Face, who had gone 18–1 and been rewarded with a seventh-place finish for NL MVP. There was just one problem, Holtzman figured: Face hadn’t actually been that good.

“Everybody thought he was great,” Holtzman, who died in 2008, told Sports Illustrated in 1992. “But when a relief pitcher gets a win, that’s not good, unless he came into a tie game. Face would come into the eighth inning and give up the tying run. Then Pittsburgh would come back to win in the ninth.” (In five of his wins, Face entered with a lead and left without one.)

So Holtzman set out to create his own definition for the save, with criteria much stingier than Roth’s. In order to be eligible, a reliever had to face the potential tying or winning run, or come into the final inning and pitch a perfect frame with a two-run lead. If neither of those situations applied, there was no save opportunity. In 1960, Holtzman began using this formula to track his version of the stat around the game, and a leaderboard was regularly published in The Sporting News.

Soon, the save was everywhere. Fans heard the term from managers, players, reporters. It just wasn’t always clear what they meant. Holtzman’s definition was popular, but there were teams that evaluated their relievers with a system like Roth’s, and there were those that used a formula in between. Cardinals skipper Johnny Keane ignored these definitions and concocted his own: he kept a little black book in the dugout, saying that he made a note “if a pitcher does a good job in protecting the lead,” even if he did not close out the ninth.

The situation led to plenty of questions about statistical rigor, but it led to philosophical reflection, too. What, exactly, was a save supposed to measure? A box score had never before had to grapple with a question quite like this.

The Baseball Writers’ Association of America decided it had to take action. In 1963, the BBWAA convened a committee to propose an official save statistic, ultimately settling on the original formula from Holtzman, with one tweak: if a reliever pitched two or more perfect innings with a three-run lead, he could qualify for a save, too.

The BBWAA realized that teams with other statistical formulas might not be thrilled about making the switch, so it didn’t ask for the formula to be implemented right away. Instead, the committee suggested, baseball should use this system for a trial period of one year. If teams wanted to propose changes afterwards, they could do so; then, the save might become official. The American League’s clubs took a vote and agreed to try it. The National League, however, wasn’t so eager. Roth’s Dodgers liked their existing formula, and so did several of their fellow clubs. They didn’t want to change their record books.

But their decision received a wave of bad press—writers put the league on blast for blocking the statistic, and the little number caused a big headache. “This issue has been blown completely out of proportion,” NL publicity chief Dave Grote griped to The Sporting News in May 1964, a month after the season had begun. “It was grossly unfair to brand the National League club public relations directors as ‘uncooperative’ because some of them expressed reluctance to accept the so-called BBWAA proposal for a uniform saves rule.”

Grossly unfair or not, the criticism worked. The NL bowed to the pressure and decided to use the standardized save system for the rest of the 1964 season. The BBWAA had exactly what it wanted—both leagues’ cooperation to track saves under a standard system for the season. But it didn’t end as the writers had hoped. After 1964’s trial period, teams did not move to endorse the save as an official statistic and would not touch it for several years, even as the save continued to spread, in media coverage and salary negotiations and casual conversation.

After 1968’s Year of the Pitcher, however, baseball found plenty to change about pitching. The Playing Rules Committee shrank the strike zone and lowered the mound, and, from the Scoring Rules Committee, there was one more announced change: For 1969, the save would finally be an official statistic. It wasn’t the BBWAA’s originally proposed formula, though. It wasn’t Holtzman’s, either. Instead, it was Roth’s. The committee was working on its own, without a proposal from the BBWAA, and so it did not have to cater to the writers’ definition. If a reliever entered with a lead, finished with a lead, and could not be credited with the win, he’d earn a save.

This formula was far more liberal than the previous proposal, which triggered steady frustration, even from the relievers who found the most success under the loose criteria. After Tigers reliever John Hiller set a record with 38 saves in 1973, he criticized the metric: “The way things stand, there’s no way a relief pitcher can show what kind of year he’s had . . . Some saves are very important. Some are ridiculous.” The Mets’ Tug McGraw finished in the top five for saves in both 1972 and 1973, but he announced that he found the official statistic so useless that he had invented a different system for contract negotiations. “If I enter a game in relief and the bases are loaded and I leave all three of those runners stranded, I get three plus marks. If one scores and I strand the other two, I get two plus marks and one minus,” he explained. “At the end of the season, you total up the marks... It gives you a clearer picture of what kind of a job the relief pitcher really has done.”

So for 1974, the Scoring Rules Committee decided to change the formula. To earn a save, a reliever would henceforth have to face the potential tying or winning run, or pitch at least three perfect innings to preserve a lead. It was closer to the definition that had originally been proposed in 1964. This didn’t mean that it was widely embraced, however. Writers were upset that a drastic change had been made so quickly, without outside consultation, and relievers were frustrated that their core stat had been made so difficult to earn. “The baseball scoring rules committee, in its infinite wisdom, has changed the definition of a ‘save’ for relief pitchers without doing any research whatever,” sniped an editorial in The Sporting News.

Unsurprisingly, save totals plummeted. In 1973, 42% of games ended in a save. After the switch in ’74, the rate fell to 27%.

It was maddening for relievers, confusing for fans, and embarrassing for the rules committee. For 1975, the BBWAA formally proposed another change to the statistic, requesting the third official save formula in three years. Its Goldilocks solution was more reasonable than ’74’s, less generous than ’69’s. Under this definition, a pitcher would be credited with a save when:

• He was the finishing pitcher;

• He was not the winning pitcher;

• And he met one of three conditions: a) he entered the game with a lead of no more than three runs and pitched for at least one inning, b) he entered the game with the potential tying run either on base, at bat, or on deck, or c) he pitched effectively for at least three innings.
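
For readers who think in code, the three-part test above can be sketched as a short Python function. This is only an illustration of the criteria as this article lists them, not the official rulebook text. The class and field names are invented for the example, the requirement that the pitcher's team won is an assumption implied by the first two bullets, and "pitched effectively" is left as a yes-or-no judgment call by the scorer, which is exactly the vagueness that worried the Elias Sports Bureau.

```python
from dataclasses import dataclass


@dataclass
class ReliefAppearance:
    """One relief outing. Every field name here is hypothetical."""
    team_won: bool                 # assumption: a save requires a team win
    finished_game: bool            # he was the finishing pitcher
    credited_with_win: bool        # he was the winning pitcher
    lead_on_entry: int             # runs his team led by when he entered
    innings_pitched: float         # 1.0 is a full inning, 1/3 is one out
    tying_run_close: bool          # tying run on base, at bat, or on deck
    pitched_effectively: bool      # scorer's judgment, not defined further


def is_save_1975(a: ReliefAppearance) -> bool:
    """Apply the 1975 criteria as summarized in the article."""
    # First two bullets: finishing pitcher, and not the winning pitcher.
    if not (a.team_won and a.finished_game) or a.credited_with_win:
        return False
    # (a) a lead of no more than three runs, and at least one inning pitched
    cond_a = 1 <= a.lead_on_entry <= 3 and a.innings_pitched >= 1
    # (b) potential tying run on base, at bat, or on deck at entry
    cond_b = a.tying_run_close
    # (c) at least three innings, pitched "effectively"
    cond_c = a.pitched_effectively and a.innings_pitched >= 3
    return cond_a or cond_b or cond_c


# A clean ninth inning with a two-run lead qualifies under (a).
ninth_inning = ReliefAppearance(
    team_won=True, finished_game=True, credited_with_win=False,
    lead_on_entry=2, innings_pitched=1.0,
    tying_run_close=False, pitched_effectively=True,
)
print(is_save_1975(ninth_inning))
```

Under this sketch, one inning of mop-up work with a five-run lead earns nothing, which is the kind of "ridiculous" save that Roth's looser formula would have counted.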

The committee took this one seriously, and it was approved, with oversight from the Elias Sports Bureau. (The Bureau expressed concern over the word “effectively” in the last clause, worrying that it might be too vague, but it stayed.) Baseball finally had its save—not Roth’s, not Holtzman’s, but something entirely different—and this one stuck, albeit not always smoothly.

Look no further than one of the first relievers to issue a verdict. “It’s not only sports writers and fans who don’t understand what relief pitching’s all about,” fumed 1974 NL saves leader and Cy Young winner Mike Marshall. “The Lords of baseball obviously don’t understand either—the ones who make the rules.” Decades later, this version of the save is still here, and so, too, is the debate over just who understands how well it really works.